Persistent Compute Objects, or picos, are tools for modeling the Internet of Things. A pico represents an entity: something that has a unique identity and a long-lived existence. Picos can represent people, places, things, organizations, and even ideas.

Motivation

Personal data

...

Picos are at the heart of the pico system. Other pieces of the system either support or interact with picos to produce an active model.

The Event-Query API

One of the first things to get out of the way: the pico API is not RESTful. Picos don't present a collection of resources to be manipulated by GET, POST, PUT, and DELETE. The API does use HTTP as a transport, but we also have an SMTP transport for events. So HTTP and its methods are just one transport for the pico API. If you've embraced REST as a religion, this may seem like sacrilege, but it's really a matter of using the right API for the job. RESTful APIs are great for request-response style interaction, but not so good for the evented interactions that picos support.
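To make the "HTTP is just a transport" point concrete, here is a minimal Python sketch of an event as a transport-neutral record (domain, type, attributes) that is only encoded into an HTTP request at the last moment. The URL layout `/sky/event/<eci>/<eid>/<domain>/<type>` is our understanding of the Sky Event API pattern and should be checked against that spec; the host, channel identifier (`eci`), event ID, and event names are hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict
from urllib.parse import quote, urlencode


@dataclass(frozen=True)
class Event:
    """A transport-neutral event: what happened, not which resource to modify."""
    domain: str
    type: str
    attrs: Dict[str, str] = field(default_factory=dict)


def to_http_get(event: Event, host: str, eci: str, eid: str) -> str:
    """Encode an event as an HTTP GET URL.

    Assumed layout: https://<host>/sky/event/<eci>/<eid>/<domain>/<type>?<attrs>
    The same Event could just as well be serialized into an email for an
    SMTP transport; nothing about the event itself depends on HTTP.
    """
    parts = ("sky", "event", eci, eid, event.domain, event.type)
    path = "/".join(quote(part, safe="") for part in parts)
    query = urlencode(sorted(event.attrs.items()))
    url = f"https://{host}/{path}"
    return f"{url}?{query}" if query else url


if __name__ == "__main__":
    fillup = Event("vehicle", "fuel_purchased", {"gallons": "12.5"})
    print(to_http_get(fillup, "example.com", "ECI123", "evt-1"))
```

Notice that nothing here maps onto GET, POST, PUT, or DELETE semantics: GET is merely the carrier for an event that says something happened.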

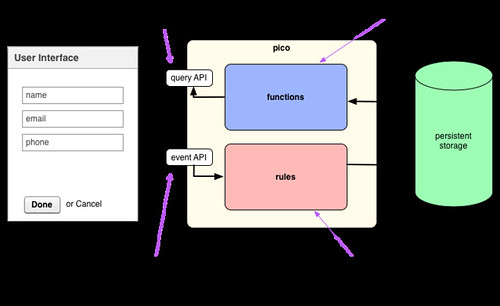

Instead, the pico API more closely follows the Command Query Separation (CQS) pattern, although there are no "commands" as such; there are events instead. So we might call it an event-query model. CQS is the basis for Command Query Responsibility Segregation (CQRS), which means that the code stack that handles queries can be separated from the code that modifies system state. Picos do this naturally, as we'll see below.
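The separation can be illustrated with a toy, in-process Python model. This is a sketch under our own assumptions, not how picos are implemented: events are the only path that changes state and they return nothing, while queries run against a copy of the state and so cannot change it. The `vehicle:fuel_purchased` event and the `total_gallons` query are hypothetical names.

```python
import copy
from typing import Any, Callable, Dict, List, Optional, Tuple

State = Dict[str, Any]
EventHandler = Callable[[State, Dict[str, Any]], None]


class Pico:
    """Toy pico: events are the only way to change state; queries only read it."""

    def __init__(self) -> None:
        self._state: State = {}
        # Event handlers keyed by (domain, type); each may mutate state.
        self._handlers: Dict[Tuple[str, str], List[EventHandler]] = {}
        # Named query functions; each receives a copy of state, never the original.
        self._queries: Dict[str, Callable[..., Any]] = {}

    def on(self, domain: str, type_: str, handler: EventHandler) -> None:
        self._handlers.setdefault((domain, type_), []).append(handler)

    def provide(self, name: str, fn: Callable[..., Any]) -> None:
        self._queries[name] = fn

    def raise_event(self, domain: str, type_: str,
                    attrs: Optional[Dict[str, Any]] = None) -> None:
        # Event path: may change state, returns nothing to the caller.
        for handler in self._handlers.get((domain, type_), []):
            handler(self._state, attrs or {})

    def query(self, name: str, *args: Any) -> Any:
        # Query path: works on a deep copy, so it cannot modify pico state.
        return self._queries[name](copy.deepcopy(self._state), *args)


if __name__ == "__main__":
    p = Pico()
    p.on("vehicle", "fuel_purchased",
         lambda state, attrs: state.setdefault("fillups", []).append(attrs["gallons"]))
    p.provide("total_gallons", lambda state: sum(state.get("fillups", [])))
    p.raise_event("vehicle", "fuel_purchased", {"gallons": 12.5})
    p.raise_event("vehicle", "fuel_purchased", {"gallons": 10.0})
    print(p.query("total_gallons"))  # 22.5
```

Because the two paths share nothing but the stored state, each could be served by a separate code stack, which is the point of CQRS.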

Second, as I pointed out in Protocols and Metaprotocols, the pico API is really a meta-API in that it describes the pattern for the API rather than the specific API itself. Put another way, every pico exposes a unique API depending on what rulesets have been installed in it.

The Sky Event API describes the event API pattern for a pico including what components are important and how those components are encoded in an HTTP method (GET or POST). The specific API for a given pico, however, depends on which rules are installed since it is rules that respond to events. Because of the event expressions in select statements, we can calculate the specific events to which a given ruleset responds. This is called salience data. This is similar to the way that RMI uses Java classes to determine the specification for the object method interactions to which a particular Java class will respond.
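Salience data can be computed with a simple inversion: walk every installed rule and record which events its select expression mentions. The Python sketch below assumes a simplified rule model in which each rule's `select when` expression has been reduced to a list of primitive `(domain, type)` pairs; real KRL event expressions can be compound, and that structure is deliberately ignored here. The ruleset IDs and event names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

EventKey = Tuple[str, str]  # (domain, type)


@dataclass
class Rule:
    name: str
    # Simplified stand-in for a rule's "select when" expression: the
    # (domain, type) pairs that can trigger it. Compound expressions
    # (for example, ones combining events with "and" or "or") are
    # flattened to their primitive events here.
    selects: List[EventKey]


def salience(rulesets: Dict[str, List[Rule]]) -> Dict[EventKey, Set[str]]:
    """Map each (domain, type) to the IDs of the rulesets that respond to it."""
    table: Dict[EventKey, Set[str]] = defaultdict(set)
    for rid, rules in rulesets.items():
        for rule in rules:
            for key in rule.selects:
                table[key].add(rid)
    return dict(table)


if __name__ == "__main__":
    installed = {
        "fuse_fuel": [Rule("record_fillup", [("vehicle", "fuel_purchased")])],
        "fuse_trips": [Rule("start_trip", [("vehicle", "ignition_on")]),
                       Rule("charge_trip_fuel", [("vehicle", "fuel_purchased")])],
    }
    # The keys of the salience table are this pico's specific event API.
    for key, rids in sorted(salience(installed).items()):
        print(key, sorted(rids))
```

Installing or removing a ruleset changes the table, which is exactly why the specific event API differs from pico to pico.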

Similarly, the Sky Cloud API defines the patterns for queries that a pico understands. Again this is a meta-API, since the queries that any given pico responds to depend on the modules installed. Queries can thus be implemented by different code than event processing and, in practice, tend to be much faster.
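A query names a function provided by an installed module rather than an event, so whether a query is valid depends on what the pico has installed. This Python sketch assumes the layout `https://<host>/sky/cloud/<rid>/<function>?_eci=<eci>&<args>`, which reflects our understanding of the Sky Cloud API pattern and should be verified against that spec; the `installed` map, module IDs, and function names are hypothetical.

```python
from typing import Dict, Set
from urllib.parse import quote, urlencode


def query_url(host: str, eci: str, rid: str, function: str,
              args: Dict[str, str], installed: Dict[str, Set[str]]) -> str:
    """Build a query URL, refusing queries this pico cannot answer.

    `installed` maps a module's ruleset ID to the names of the functions it
    provides for queries. Because the query API is a meta-API, the set of
    valid (rid, function) pairs depends entirely on what is installed.
    """
    if function not in installed.get(rid, set()):
        raise LookupError(f"{rid} does not provide query {function!r}")
    params = [("_eci", eci)] + sorted(args.items())
    path = f"sky/cloud/{quote(rid, safe='')}/{quote(function, safe='')}"
    return f"https://{host}/{path}?{urlencode(params)}"


if __name__ == "__main__":
    installed = {"fuse_fuel": {"fillups", "total_gallons"}}
    print(query_url("example.com", "ECI123", "fuse_fuel", "fillups",
                    {"limit": "5"}, installed))
```

Checking against the installed modules before issuing the request is a client-side convenience in this sketch; the pico itself is what ultimately decides which queries it answers.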

The following diagram shows the event-query model and how applications use it.

In the systems we're building now, picos don't get created as empty vessels waiting for rulesets and modules to be installed and their API to be defined. Instead, we use CloudOS to create picos of specific types and they come pre-loaded with rulesets that implement CloudOS and other services. Thus, when you create a pico to represent a vehicle, it comes with the CloudOS event-query API as well as rulesets that provide an event-query API for Fuse along with a pre-defined data schema. This makes picos very powerful and gives developers significant leverage.
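The idea of typed picos that arrive pre-loaded can be sketched as a lookup from pico type to a list of rulesets installed at creation time. Everything in this Python sketch is hypothetical: the type names and ruleset IDs stand in for the real CloudOS and Fuse rulesets, which are not named here.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical pico types and ruleset IDs, for illustration only.
PICO_TYPES: Dict[str, List[str]] = {
    "base": ["cloudos"],
    "vehicle": ["cloudos", "fuse_vehicle", "fuse_fuel", "fuse_trips"],
}


@dataclass
class PicoRecord:
    name: str
    pico_type: str
    rulesets: List[str] = field(default_factory=list)


def create_pico(name: str, pico_type: str) -> PicoRecord:
    """Create a pico whose event-query API is defined at birth by its type."""
    try:
        # Copy the list so each pico can later install or remove
        # rulesets without affecting the type definition or other picos.
        rulesets = list(PICO_TYPES[pico_type])
    except KeyError:
        raise ValueError(f"unknown pico type: {pico_type}") from None
    return PicoRecord(name, pico_type, rulesets)


if __name__ == "__main__":
    truck = create_pico("my-truck", "vehicle")
    print(truck.rulesets)
```

Copying the type's ruleset list is a deliberate choice in this sketch: the type only seeds a pico, and after creation each pico's API can evolve on its own.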

The event-query API model is a significant paradigm shift for Web developers. You have to throw out some of your old assumptions and remember, you're not building a Web application or using a RESTful API. A better analogy is to think of picos as cloud-based, persistent objects and model your application in the same way you would in an object-oriented programming language. In return you'll be rewarded with a different and interesting way to build Internet applications that scale well, can be distributed across multiple domains, and put users in control of their data.

State

Event Loops

Each pico presents an event loop that handles events sent to the pico according to the rulesets installed in it. The following diagram shows the five phases of event evaluation. Note that evaluation is a cycle, as in any interpreter. The event is the input to the interpreter that causes the cycle to run. Once that event has been evaluated, the pico waits for another event.
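The shape of the cycle can be sketched in a few lines of Python. This does not model the five individual phases from the diagram; it collapses evaluation into "select the rules for this event, run them, return a response" and then goes back to waiting. The blocking `queue.Queue` inbox, the `None` shutdown sentinel, and the rule table are assumptions of the sketch.

```python
import queue
from typing import Any, Callable, Dict, List, Optional, Tuple

Handler = Callable[[Dict[str, Any]], Any]
Incoming = Optional[Tuple[str, str, Dict[str, Any]]]  # (domain, type, attrs) or None


def run_event_loop(inbox: "queue.Queue[Incoming]",
                   rules: Dict[Tuple[str, str], List[Handler]],
                   responses: List[List[Any]]) -> None:
    """Evaluate events one at a time until a None sentinel arrives."""
    while True:
        item = inbox.get()          # wait for the next event (blocks)
        if item is None:            # sentinel: shut the loop down
            break
        domain, type_, attrs = item
        # Evaluate: every rule selected by this event runs in turn, and
        # their results are gathered into a single response.
        response = [rule(attrs) for rule in rules.get((domain, type_), [])]
        responses.append(response)  # return the response, then wait again


if __name__ == "__main__":
    inbox: "queue.Queue[Incoming]" = queue.Queue()
    rules = {("echo", "hello"): [lambda attrs: "hi " + attrs["name"]]}
    inbox.put(("echo", "hello", {"name": "Ann"}))
    inbox.put(None)
    out: List[List[Any]] = []
    run_event_loop(inbox, rules, out)
    print(out)
```

Processing one event to completion before taking the next keeps each evaluation isolated; an event that selects no rules still produces an (empty) response.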

...

Once the response has been returned, the pico waits for another event.

KRL

KRL rulesets execute inside a persistent compute object, or pico.

While rulesets are the primary organizing feature of KRL, you cannot program KRL without understanding picos.

Rulesets

CloudOS

Applications

PCAA

We have been using the model extensively for the last 6 months and have found it very effective for building CloudOS applications. I wrote about a largish experiment with this model in the white paper Introducing Forever: Personal Cloud Application Architectures. The white paper describes an application, called Forever, that uses picos to represent a social graph for the purpose of creating an evergreen address book. The interface is built in an unhosted style using nothing but JavaScript. The picos provide the business logic and persistence layer. We called this the "personal cloud application architecture," or PCAA.

I wrote a simple Todo list application that used picos to store and manage todo list items, to illustrate the simple calls that could be used to merely store and retrieve data from a pico's PDS in a simple PCAA. I describe that example in Building an App Using the Personal Cloud Application Architecture.
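The original Todo example was written in JavaScript; the Python sketch below only illustrates its shape. The client raises events to write and issues queries to read, while an in-memory stand-in plays the role of the remote pico and its PDS. `InMemoryPico`, the `todo:new_item` and `todo:item_done` events, and the `items` query are all hypothetical; a real client would send these over HTTP to the pico.

```python
from typing import Any, Dict, List


class InMemoryPico:
    """Stand-in for a remote pico: a tiny PDS plus two event rules and one query."""

    def __init__(self) -> None:
        self.pds: Dict[str, List[str]] = {"todos": []}

    def raise_event(self, domain: str, type_: str, attrs: Dict[str, Any]) -> None:
        todos = self.pds["todos"]
        if (domain, type_) == ("todo", "new_item"):
            todos.append(attrs["title"])
        elif (domain, type_) == ("todo", "item_done") and attrs["title"] in todos:
            todos.remove(attrs["title"])

    def query(self, name: str) -> Any:
        if name == "items":
            return list(self.pds["todos"])  # hand back a copy, not the PDS itself
        raise LookupError(name)


class TodoClient:
    """What the unhosted front end does: raise events to write, query to read."""

    def __init__(self, pico: InMemoryPico) -> None:
        self.pico = pico

    def add(self, title: str) -> None:
        self.pico.raise_event("todo", "new_item", {"title": title})

    def complete(self, title: str) -> None:
        self.pico.raise_event("todo", "item_done", {"title": title})

    def items(self) -> List[str]:
        return self.pico.query("items")


if __name__ == "__main__":
    client = TodoClient(InMemoryPico())
    client.add("buy milk")
    client.add("call mom")
    client.complete("buy milk")
    print(client.items())
```

The client holds no state of its own; everything lives in the pico, which is what lets an unhosted front end stay so thin.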

Developers building applications using the unhosted, PCAA style can sometimes get away without really thinking too much about the underlying model, but sophisticated applications will require a more detailed understanding. Developers programming picos to implement a particular system, of course, will need to be skilled at event-query systems in order to implement an effective and easy-to-use API. We're still developing our knowledge of how they work, how best to document them, and the best ways to promulgate our findings.

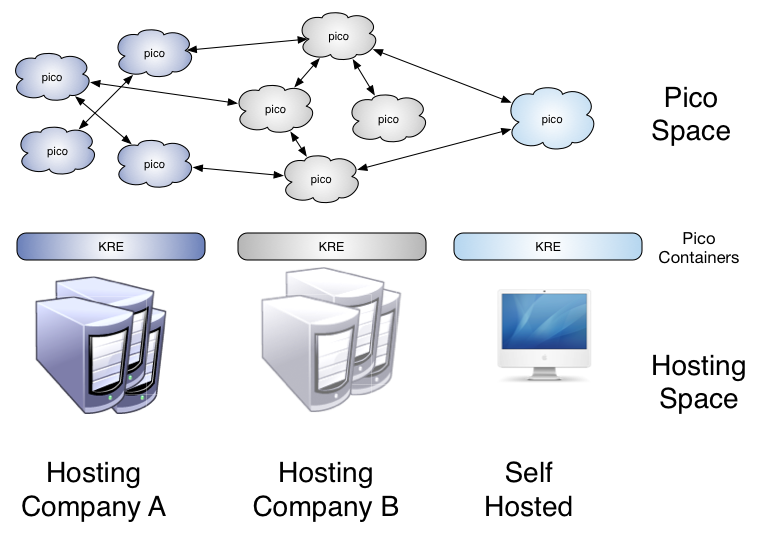

KRE

Pico Hosting Model