Persistent Compute Objects, or picos, are tools for modeling the Internet of Things. A pico represents an entity—something that has a unique identity and a long-lived existence. Picos can represent people, places, things, organizations, and even ideas.

The motivation for picos is to design infrastructure to support the Internet of Things that is decentralized, heterarchical, and interoperable. These three characteristics are essential to a workable solution and are sadly lacking in our current implementations.

Without these three characteristics, it's impossible to build an Internet of Things that respects people's privacy and independence.


A summary is located at Picos: Persistent Compute Objects

Pico Building Blocks

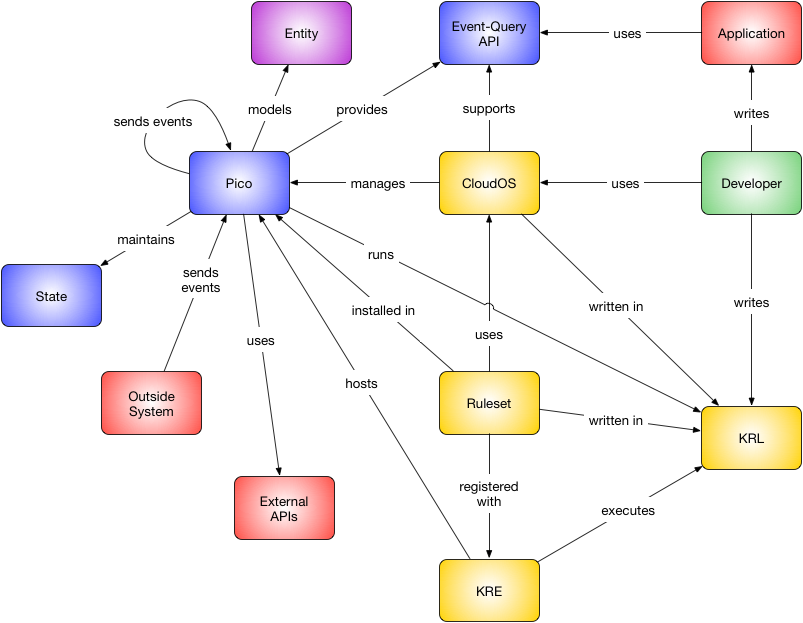

The following sections discuss the various concepts in the pico ecosystem. The following diagram shows the relationships and interactions between those concepts.

Picos

Picos are:

- persistent: They exist from when they are created until they are explicitly deleted. Picos retain state based on past operations.

- unique: They have an identity that is immutable. While attributes of the pico, its state, may change, its identity does not.

- online: They are available on the Internet and respond to events and queries.

- concurrent: They operate independently of one another and process events and queries asynchronously.

- event-driven: They respond to events by changing state and sending new events.

- rule-based: Their behavior is expressed as rules that pattern-match against incoming events. Put another way, rules listen for events on the pico's internal event bus.

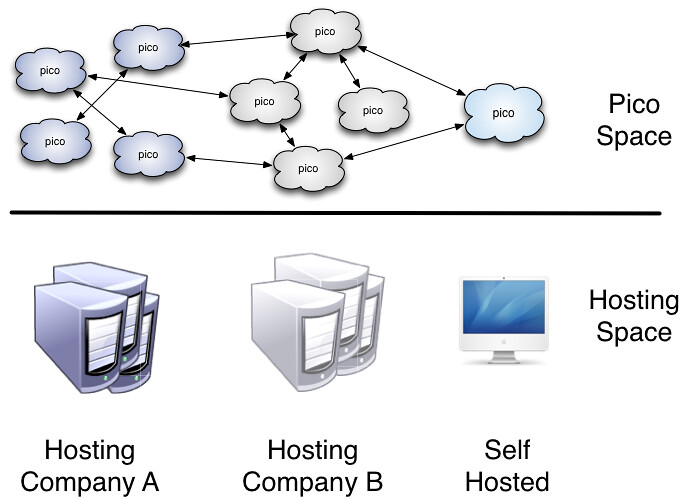

Collections of picos are used to create models of interacting entities in the Internet of Things. Picos communicate by sending events to or making requests of each other in an Actor-like manner.

Picos are at the heart of the pico system. Other pieces of the system either support or interact with picos to produce an active model.

The Event-Query API

One of the first things to get out of the way: the pico API is not RESTful. Picos don't present a collection of resources to be manipulated by GET, POST, PUT, and DELETE. The API does rely on HTTP transport, but we also have an SMTP transport for events. So HTTP and its methods are just a transport for the pico API. If you've gotten REST as a religion, this may seem like sacrilege, but it's really a matter of using the right API for the job. RESTful APIs are great for request-response style interaction, but not so good for the evented interactions that picos support.

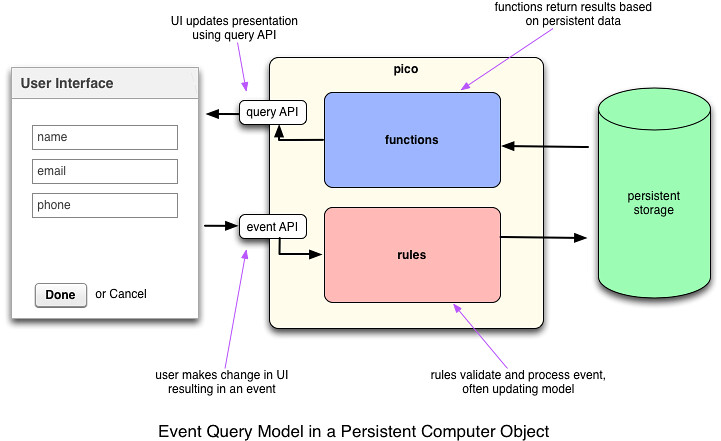

Instead, the pico API more closely follows the Command Query Separation (CQS) pattern, although there are no "commands" as such. Rather there are events. So, we might call it an event-query model. CQS provides for Command Query Responsibility Segregation. What this means is that the code stack that supports handling queries can be separated from code that supports modifying system state. Picos do this naturally as we'll see below.
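The split between events and queries can be sketched in a few lines of Python. This is only an illustration of the pattern, not how KRE implements it: the `Pico` class, the `trip`/`ended` event, and the `total_miles` query are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

EventKey = Tuple[str, str]  # (event domain, event type)


@dataclass
class Pico:
    """Toy pico: events may change state, queries only read it."""
    state: Dict[str, Any] = field(default_factory=dict)
    event_handlers: Dict[EventKey, List[Callable[[dict, dict], None]]] = field(default_factory=dict)
    query_functions: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def send_event(self, domain: str, type_: str, attrs: dict) -> None:
        """Event side: run every handler listening for this domain/type."""
        for handler in self.event_handlers.get((domain, type_), []):
            handler(self.state, attrs)

    def query(self, name: str, **args: Any) -> Any:
        """Query side: call a function with a shallow copy of the state."""
        return self.query_functions[name](dict(self.state), **args)


def record_trip(state: dict, attrs: dict) -> None:
    # Hypothetical event handler: accumulates mileage in the pico's state.
    state["miles"] = state.get("miles", 0.0) + attrs["miles"]


def total_miles(state: dict) -> float:
    # Hypothetical query function: reads state, never writes it.
    return state.get("miles", 0.0)


vehicle = Pico()
vehicle.event_handlers[("trip", "ended")] = [record_trip]
vehicle.query_functions["total_miles"] = total_miles

vehicle.send_event("trip", "ended", {"miles": 12.5})
vehicle.send_event("trip", "ended", {"miles": 3.0})
print(vehicle.query("total_miles"))  # prints 15.5
```

Because the query path never touches the event handlers, it could be served by entirely different code, which is the separation described above.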

Second, as I pointed out in Protocols and Metaprotocols, the pico API is really a meta-API in that it describes the pattern for the API rather than the specific API itself. Put another way, every pico exposes a unique API depending on what rulesets have been installed in it.

The Sky Event API describes the event API pattern for a pico including what components are important and how those components are encoded in an HTTP method (GET or POST). The specific API for a given pico, however, depends on which rules are installed since it is rules that respond to events. Because of the event expressions in select statements, we can calculate the specific events to which a given ruleset responds. This is called salience data. This is similar to the way that RMI uses Java classes to determine the specification for the object method interactions to which a particular Java class will respond.

Similarly, the Sky Cloud API defines the patterns for queries that a pico understands. Again this is a meta-API since the queries that any given pico responds to depend on the modules installed. Queries are thus implementable with different code than the event processing and, in practice, tend to be much faster.

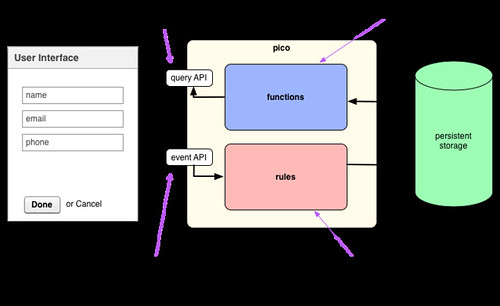

The following diagram shows the event-query model and how applications use it.

In the systems we're building now, picos don't get created as empty vessels waiting for rulesets and modules to be installed and their API to be defined. Instead, we use CloudOS to create picos of specific types and they come pre-loaded with rulesets that implement CloudOS and other services. Thus, when you create a pico to represent a vehicle, it comes with the CloudOS event-query API as well as rulesets that provide an event-query API for Fuse along with a pre-defined data schema. This makes picos very powerful and gives developers significant leverage.

The event-query API model is a significant paradigm shift for Web developers. You have to throw out some of your old assumptions and remember, you're not building a Web application or using a RESTful API. A better analogy is to think of picos as cloud-based, persistent objects and model your application in the same way you would in an object-oriented programming language. In return you'll be rewarded with a different and interesting way to build Internet applications that scale well, can be distributed across multiple domains, and put users in control of their data.

State

Event Loops

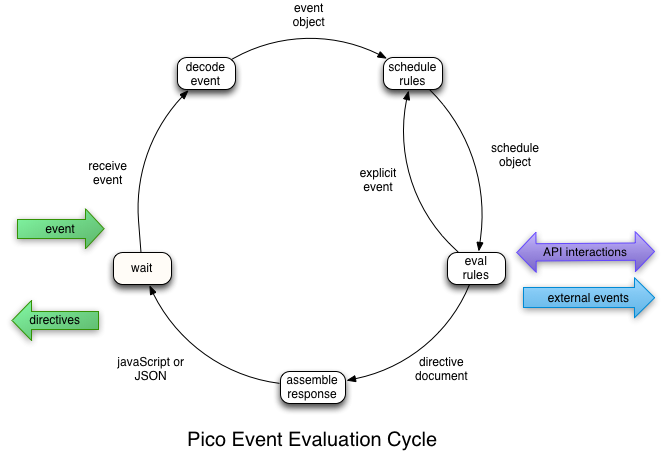

Each pico presents an event loop that handles events sent to the pico according to the rulesets that are installed in it. The following diagram shows the five phases of event evaluation. Note that evaluation is a cycle like any interpreter. The event represents the input to the interpreter that causes the cycle to happen. Once that event has been evaluated, the pico waits for another event.

We'll discuss the five stages in order.

Wait

The wait phase is where picos spend most of their time. For efficiency's sake, the pico is suspended during the wait phase. When an event is received, KRE (Kinetic Rules Engine) wakes the pico up and begins executing the cycle. Unsuspending a pico is a very lightweight operation.

Decode Event

The decode phase performs the simple task of unpacking the event from whatever method was used to transport it and putting it in a standard RequestInfo object. The RequestInfo object is used for the remainder of the event evaluation cycle whenever information about the event is needed.

While most events, at present, are transported over HTTP via the Sky Event API, that needn't be the case. Events can be transported via any means for which a decoder exists. In addition to Sky Event, there is also support for an SMTP transport called Sky Mail. Other transports (e.g. XMPP, RabbitMQ, etc.) could be supported with minimal effort.
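The decode phase amounts to one decoder per transport, all producing the same object. The Python sketch below shows that shape under loud assumptions: the fields of `RequestInfo`, the HTTP path layout, and the mail layout (event in the subject, attributes as `key=value` lines) are invented for illustration and are not the actual Sky Event or Sky Mail encodings.

```python
from dataclasses import dataclass, field
from email import message_from_string
from typing import Callable, Dict
from urllib.parse import parse_qsl


@dataclass
class RequestInfo:
    """Transport-independent view of an event (fields are assumptions)."""
    domain: str
    type: str
    attrs: Dict[str, str] = field(default_factory=dict)


def decode_http(raw: dict) -> RequestInfo:
    # Hypothetical layout: path ends in /<domain>/<type>, attributes in the query string.
    domain, type_ = raw["path"].strip("/").split("/")[-2:]
    return RequestInfo(domain, type_, dict(parse_qsl(raw.get("query", ""))))


def decode_mail(raw: str) -> RequestInfo:
    # Hypothetical layout: "Subject: <domain>:<type>", body holds key=value lines.
    msg = message_from_string(raw)
    domain, type_ = msg["Subject"].split(":", 1)
    attrs = dict(
        line.split("=", 1) for line in msg.get_payload().splitlines() if "=" in line
    )
    return RequestInfo(domain.strip(), type_.strip(), attrs)


# Supporting a new transport means registering one more decoder here.
DECODERS: Dict[str, Callable[..., RequestInfo]] = {
    "http": decode_http,
    "smtp": decode_mail,
}


def decode(transport: str, raw) -> RequestInfo:
    """Unpack an event from its transport into a RequestInfo."""
    if transport not in DECODERS:
        raise ValueError(f"no decoder for transport {transport!r}")
    return DECODERS[transport](raw)


via_http = decode("http", {"path": "/event/trip/ended", "query": "miles=12"})
via_mail = decode("smtp", "Subject: trip:ended\n\nmiles=12\n")
```

Both calls yield equal `RequestInfo` objects, so the rest of the cycle never needs to know how the event arrived.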

Schedule Rules

The rule scheduling phase is very important to the overall operation of the pico since building the schedule determines what will happen for the remainder of the event evaluation cycle.

Rules are scheduled using a salience graph that shows, for any given event domain and event type, which rules are salient. The event in the RequestInfo object will have a single event domain and event type. The domain and type are used to look up the list of rules that are listening for that event domain and type combination. Those rules are added to the schedule.

The salience graph is calculated from the rulesets installed in the pico. Whenever the collection of rulesets for a pico changes, the salience graph is recalculated. There is a single salience graph for each pico. The salience graph determines for which events a rule is listening by using the rule's event expression.

Rule order matters within a ruleset. KRE ensures that rules appear in the schedule in the order they appear in the ruleset. No such ordering exists for rulesets, however, so there is no guarantee that rules from one ruleset will be evaluated before or after those of another unless the programmer takes explicit steps to ensure that they are (see the discussion of explicit events below).

The salience graph creates an event bus for the pico, ensuring that as rulesets are installed their rules are automatically subscribed to the events for which they listen.
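As a rough sketch of the scheduling step, the salience graph can be modeled as a map from an event domain and type to the rules listening for that combination. The data layout and the ruleset and rule names below are hypothetical, and a full event expression is reduced here to a plain list of domain/type pairs.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

EventKey = Tuple[str, str]   # (event domain, event type)
RuleRef = Tuple[str, str]    # (ruleset id, rule name)
# ruleset id -> rules in source order, each with the events it listens for
Rulesets = Dict[str, List[Tuple[str, List[EventKey]]]]


def build_salience_graph(rulesets: Rulesets) -> Dict[EventKey, List[RuleRef]]:
    """Recompute the graph from scratch whenever the installed rulesets change."""
    graph: Dict[EventKey, List[RuleRef]] = defaultdict(list)
    for rid, rules in rulesets.items():
        for name, events in rules:            # preserves order within a ruleset
            for key in dict.fromkeys(events):  # drop duplicate events, keep order
                graph[key].append((rid, name))
    return dict(graph)


def schedule_for(graph: Dict[EventKey, List[RuleRef]], domain: str, type_: str) -> List[RuleRef]:
    """Look up the rules that are salient for one incoming event."""
    return list(graph.get((domain, type_), []))


installed: Rulesets = {
    "fuse_trips": [
        ("record_trip", [("trip", "ended")]),
        ("notify_owner", [("trip", "ended"), ("trip", "started")]),
    ],
    "logging": [("log_all", [("trip", "ended")])],
}
graph = build_salience_graph(installed)
schedule = schedule_for(graph, "trip", "ended")
```

Within `fuse_trips`, `record_trip` always precedes `notify_owner` in the schedule. The position of `log_all` relative to them is an accident of dictionary order in this sketch and should not be relied on, matching the lack of ordering between rulesets described above.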

Rule Evaluation

The rule evaluation phase is where the good stuff happens, at least from the developer's standpoint. The engine runs down the schedule, picking off rules one by one, evaluating the event expression to see if that rule is fired and then, if it is, executing the rule. Note that a rule can be on the schedule because it's listening for an event, but still not be selected because its event expression doesn't reach a final state. There might be other events that have to be raised before it is complete.

For purposes of understanding the event evaluation cycle, most of what happens in rule execution is irrelevant. The exception is the raise statement in the rule's postlude. The raise statement allows developers to raise an event as one of the results of the rule evaluation. Raising explicit events is a powerful tool.

From the standpoint of the event evaluation cycle, however, explicit events are a complicating factor because they modify the schedule. Explicit events are not like function calls or actions because they do not represent a change in the flow of control. Instead, an explicit event causes the engine to modify the schedule, possibly appending new rules. Once that has happened, rule execution takes up where it left off in the schedule. The schedule is always evaluated in order and new rules are always simply appended. This means that all the rules that were scheduled because of the original event will be evaluated before any rules scheduled because of explicit events. Programmers can also use event expressions to order rule evaluation.
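The append-only behavior is easy to see in a small simulation. The sketch below is a simplification under stated assumptions: events are plain strings, every scheduled rule fires (event expressions are not evaluated), and each rule is a function returning the explicit events it raises. All rule and event names are made up.

```python
from collections import deque
from typing import Callable, Dict, List


def run_schedule(
    salience: Dict[str, List[str]],
    rules: Dict[str, Callable[[str], List[str]]],
    event: str,
) -> List[str]:
    """Evaluate one event; return rule names in the order they ran."""
    # Rules scheduled because of the original event go on first.
    schedule = deque((name, event) for name in salience.get(event, []))
    fired: List[str] = []
    while schedule:
        name, current = schedule.popleft()
        fired.append(name)
        for raised in rules[name](current):
            # An explicit event never jumps the queue: its rules are appended.
            schedule.extend((n, raised) for n in salience.get(raised, []))
    return fired


salience = {
    "trip:ended": ["record_trip", "notify_owner"],
    "trip:recorded": ["update_totals"],
}
rules = {
    "record_trip": lambda event: ["trip:recorded"],  # raises an explicit event
    "notify_owner": lambda event: [],
    "update_totals": lambda event: [],
}
order = run_schedule(salience, rules, "trip:ended")
print(order)  # prints ['record_trip', 'notify_owner', 'update_totals']
```

Even though `record_trip` raises `trip:recorded` first, `update_totals` runs only after `notify_owner`, because the rules for the original event were already ahead of it on the schedule.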

If the rule makes a synchronous call to an external API, rule execution waits for the external resource to respond. If a rule sends an event to another pico, that sets off another independent event evaluation cycle; it doesn't modify the schedule for the cycle executing the event:send(). Inter-pico events are sent asynchronously by default.

Assembling the Response

The final response is assembled from the output of all the rules that fired. The idea of an event having a response is unusual. For KRE it's a historic happenstance that has proven useful. Events raised asynchronously never have responses. For events raised synchronously, the response is most useful as a way to ensure the event was received and processed. But the response can have real utility as well.

Historically, KRE returned JavaScript as the result of executing rules. That has been expanded so that the result can be JSON or other correctly mime-typed content. This presents challenges for the engine since rules could be written by many different developers and yet there can be only one result type.

Presently the engine handles this by assuming that any response to an event with the domain web will be JavaScript and otherwise be a directive document (JSON with a specific schema). This suits for many purposes, but doesn't admit raw responses such as images or even just a JSON doc that isn't a directive. The engine tries to put a correctly formatted response together as best it can, but more work is needed, especially in handling raw responses.
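The current rule of thumb can be written down as a short function. This is a sketch of the decision only: the shape of the directive document (a `directives` list) and the chosen MIME type strings are assumptions for illustration, since the actual schema is not spelled out here.

```python
import json
from typing import Any, List, Tuple


def assemble_response(domain: str, outputs: List[Any]) -> Tuple[str, str]:
    """Combine the output of every fired rule into one (MIME type, body) pair."""
    if domain == "web":
        # Events in the web domain: each rule contributes a JavaScript fragment.
        return "text/javascript", "\n".join(outputs)
    # Everything else: wrap the rule outputs in a directive document.
    # The {"directives": [...]} layout is a hypothetical schema.
    return "application/json", json.dumps({"directives": outputs})


web_type, web_body = assemble_response("web", ["notify('hi');", "refresh();"])
other_type, other_body = assemble_response(
    "trip", [{"name": "trip_saved", "options": {"miles": 12}}]
)
```

The sketch also makes the limitation visible: there is no branch that could return an image or a bare JSON document, which is the raw-response gap mentioned above.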

This isn't usually a problem because the semantics of a particular event usually imply a specific kind of response (much as we've determined up front that JavaScript is the correct response for events with a web domain). Over time, I expect more and more events will be raised asynchronously and the response document will become less important.

Waiting...Again

Once the response has been returned, the pico waits for another event.

Communications between picos are point-to-point, and every pico can have a unique address, shared by no one else, for each pico with which it communicates. Collections of picos were used in architecting the Fuse connected-car system with significant advantage.

Pico Building Blocks

Picos are part of a system that supports programming them. While you can imagine different implementations that support the characteristics of picos enumerated in the previous section, this post will describe the implementation and surrounding ecosystem that I and others have been building for the past seven years.

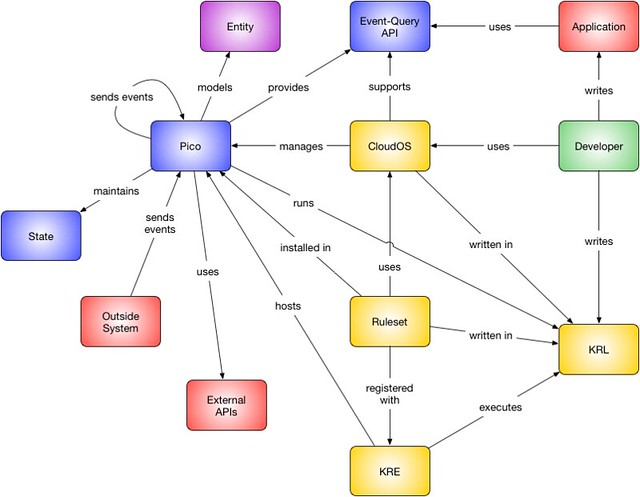

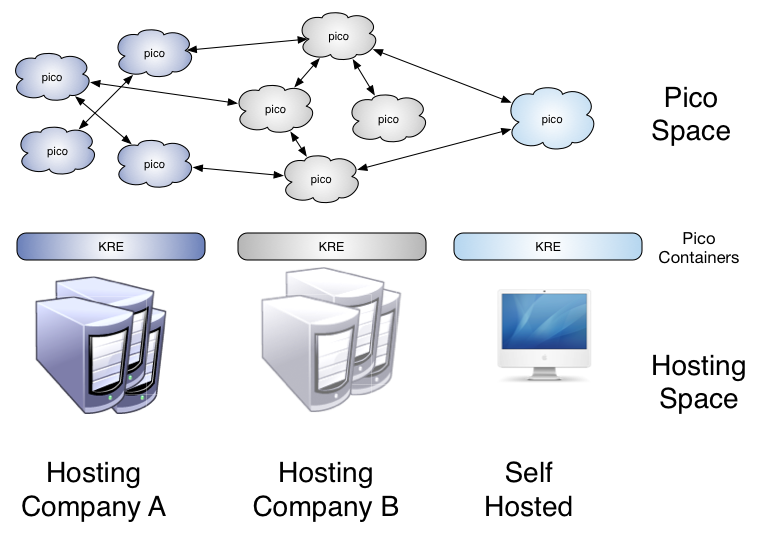

The various pieces of the pico ecosystem and their relationships are shown in the following diagram.

For people who've read this blog, many of the titles in these boxes will be familiar, but I suspect that the exact nature of how they relate to each other has been a mystery in many cases. Here are some brief descriptions of the primary components and some explanation of the relationships.

Event-Query API

The event-query API is a name I gave the style of interaction that picos support. Picos don't implement RESTful APIs. They aren't meant to. As I explain in Pico APIs: Events and Queries, picos are primarily event-driven but also support a query API for getting values from the pico. Each pico has an internal event bus. So while picos interact with each other and the world in a point-to-point Actor model, internally, they distribute events with a publish and subscribe mechanism.

Applications

Picos use the event-query API to communicate with each other. So do applications using a programming style called the pico application architecture (formerly the personal cloud application architecture). The PAA is a variant on an architecture that is being promoted as unhosted web apps and remotestorage. PAA goes beyond those models by offering a richer API that includes not just storage, but other services that developers might need. In fact the set of services is infinitely variable in each pico.

CloudOS

In the same way that operating systems provide more complex, more flexible services for developers than the bare metal of the machine, CloudOS provides pico programmers with important services that make picos easier to use and manage. For example, CloudOS provides services for creating new picos and creating communication channels between picos.

Note: I don't really like the name CloudOS, but it's all I've got for now. If you have ideas, I'm open to them so long as they are not "pico os" or "POS."

Rulesets

The basic module for programming picos is a ruleset. A ruleset is a collection of rules that respond to events. But a ruleset is more than that. Functions in the ruleset make up the queries that are available in the event query API. Thus, the specific event-query API that a given pico presents to the world correlates exactly to the rulesets that are installed in the pico.

The following diagram shows the rules and functions in a pico presenting an event-query API to an application.

CloudOS provides functionality for installing rulesets in a pico, and they can change over time just as the programs installed on a computer change over time. As the installed rulesets change, so does the pico's API.

KRL

KRL is the language in which rulesets are programmed. Picos run KRL using the event evaluation cycle. Rules in KRL are "event-condition-action" rules because they tie together an event expression, a condition, and an action. Event expressions are how rules subscribe to specific events on the pico's event bus. KRL supports complex, declarative event expressions. KRL also supports persistent variables, which is how developers access the pico's state. KRL developers do not need a database to store attributes for the pico because of persistent variables.
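To make the event-condition-action shape concrete without introducing KRL syntax, here is a Python analogy. It is not KRL and not how KRE evaluates rules: the `Rule` class is hypothetical, a single domain/type pair stands in for a full event expression, and a plain in-memory dictionary stands in for persistent variables that the engine would actually store.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple

EventKey = Tuple[str, str]  # (event domain, event type)


@dataclass
class Rule:
    """Event-condition-action rule over a pico's persistent variables."""
    select: EventKey                         # event: what the rule listens for
    condition: Callable[[dict, dict], bool]  # condition: guard on attrs and state
    action: Callable[[dict, dict], Any]      # action: what to do when it fires

    def evaluate(self, event: EventKey, attrs: dict, persistent: dict) -> Optional[Any]:
        if event != self.select:
            return None  # rule is not listening for this event
        if not self.condition(attrs, persistent):
            return None  # selected, but the condition failed
        return self.action(attrs, persistent)


def count_long_trip(attrs: dict, persistent: dict) -> dict:
    # State is kept in the persistent variables, not in an external database.
    persistent["long_trips"] = persistent.get("long_trips", 0) + 1
    return {"long_trips": persistent["long_trips"]}


long_trip = Rule(
    select=("trip", "ended"),
    condition=lambda attrs, persistent: attrs["miles"] > 10,
    action=count_long_trip,
)

pico_vars: dict = {}  # stand-in for the pico's persistent variables
long_trip.evaluate(("trip", "ended"), {"miles": 25}, pico_vars)   # fires
long_trip.evaluate(("trip", "ended"), {"miles": 4}, pico_vars)    # condition fails
long_trip.evaluate(("trip", "started"), {"miles": 0}, pico_vars)  # not selected
result = long_trip.evaluate(("trip", "ended"), {"miles": 40}, pico_vars)
```

After these four events, `pico_vars` holds a count of 2: the value written by the first firing was still there when the rule fired again, which is the role persistent variables play for a pico.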

KRE

KRE is a host or container engine for picos. A given instance of KRE can host any number of picos. KRE is the engine that makes picos work. KRE is an open source project, hosted on GitHub.

KRL rulesets are hosted online. Developers register the URL with KRE to create a ruleset ID or RID. The RID is what is installed in the pico. When the pico runs, it gets the ruleset source, parses it, optimizes it, and executes it.

The diagram below shows an important property of pico hosting. Picos can have communication relationships with each other even though they are hosted on different instances of KRE. The KRE instances need have no specific relationship with each other for picos to interact.

This hosted model is important because it provides a key component of ensuring that picos can run everywhere, not only in one organization's infrastructure.

Conclusion

Picos present a powerful model for how a decentralized, heterarchical, interoperable Internet of Things can be built. Picos are built on open-source software and support an unbiased hosting model for deployment. They have been used to build and deploy several production systems, including the Fuse connected-car system. They provide the means for giving people direct, unintermediated control of their personal data and the devices that are generating it.

I invite your questions and participation.

KRL

KRL rulesets execute inside a persistent compute object, or pico.

While rulesets are the primary organizing feature of KRL, you cannot program KRL without understanding picos.

...

Persistent Data Variables

Rulesets

CloudOS

Operating systems provide significant benefits to personal computers:

...

An operating system can provide the same benefits to personal clouds. Because it allows you to act as a peer, a CloudOS orchestrates and coordinates online interactions, enables cooperating networks of products and services, supports intention-driven automation, and transforms the way you interact with the world.

Applications

PCAA

We have been using the model extensively for the last 6 months and have found it to be very effective for building CloudOS applications. I wrote about a largish experiment with this model in the whitepaper Introducing Forever: Personal Cloud Application Architectures. The white paper describes an application, called Forever, that uses picos to represent a social graph for purposes of creating an evergreen address book. The interface is built in an unhosted style using nothing but JavaScript. The picos provide the business logic and persistence layer. We called this the "personal cloud application architecture" or PCAA.

...

Developers building applications using the unhosted, PCAA style can sometimes get away without really thinking too much about the underlying model, but sophisticated applications will require a more detailed understanding. Developers programming picos to implement a particular system, of course, will need to be skilled at event-query systems in order to implement an effective and easy-to-use API. We're still developing our knowledge of how they work, how best to document them, and the best ways to promulgate our findings.

KRE

Pico Hosting Model